Aria, Compose the Future, Product Design

Prompt

Work in teams to design a product interface that can be navigated and controlled entirely through head tracking and facial gestures. This project is both product- and business-focused, challenging conventional input methods and pushing the boundaries of UI/UX design to drive innovation.

Role

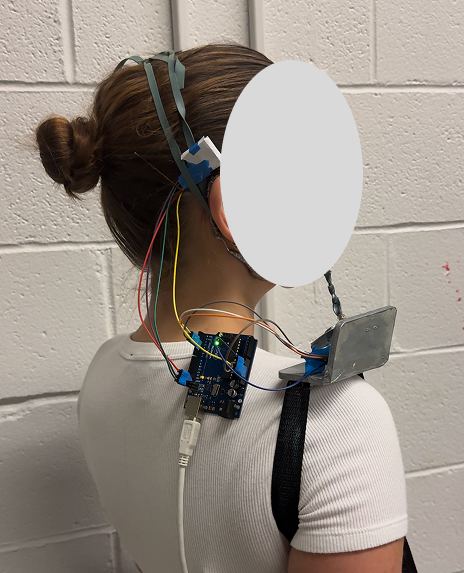


The Concept

Creating a wearable, touch-free instrument

Aria is a wearable, extremity-free instrument that produces sound based on the movement of your head and jaw. The prototype was built with an Arduino Uno, various sensors, wire, tape, and rubber bands. The final design was modeled and rendered in Cinema4D.


Objectives

- Rethink Human-Technology Interaction

The core objective was to challenge traditional notions of input by designing an interface that requires no hands, no screens, and no voice. By relying solely on head movement and facial gestures, we explored how subtle, expressive motions could become powerful tools for control, opening the door to more inclusive, adaptive technologies.

- Create a Wearable, Extremity-Free Instrument

We aimed to design a music interface that functioned entirely through motion, transforming the user’s head and jaw movements into dynamic sound. The goal was not just functionality but expressiveness: treating the human body itself as a performance tool, capable of nuance and rhythm.

- Prototype with Accessible Tools

Using an Arduino Uno, sensors, and everyday materials like tape and rubber bands, we built a working prototype that captured live motion input. This allowed us to quickly test, iterate, and explore how physical gestures could map to musical outputs, even with limited resources.

- Visualize the Future of the Product

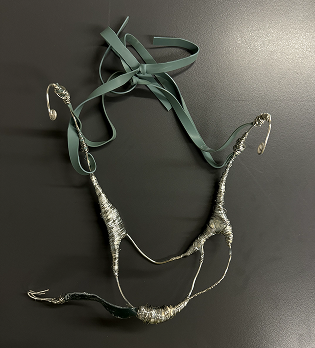

Beyond the prototype, we modeled and rendered a refined version of the wearable in Cinema4D to imagine what a final product might look like. This helped bridge the gap between rough experimentation and polished concept—offering a vision of how this tool could exist in the real world.

Functionality Goals

We needed a device capable of two main functions:

- Tracking head movement (nodding/shaking)

- Tracking jaw movement (open/close)

RIT’s New Media Design program already had several Arduino Unos but none of the sensors we needed, so we ordered two additional parts.


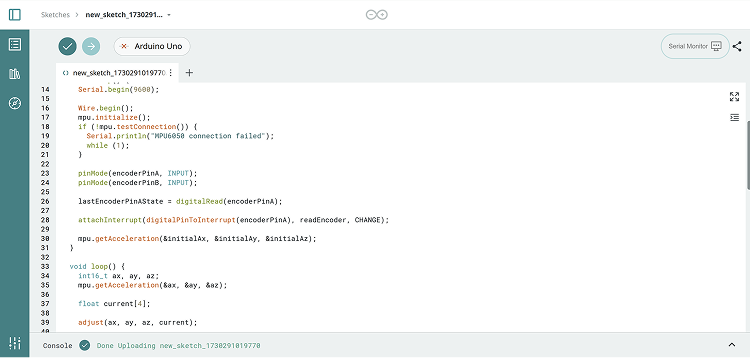

Programming & TouchDesigner

We wrote a C++ script for the Arduino that scales the sensor readings and sends them to TouchDesigner, where they arrive in a Serial DAT. A DAT Execute monitors that node and runs a Python script that parses the data into usable numbers. The TouchDesigner file then takes these inputs and plays sound with corresponding volume and pitch. It also displays reactive abstract motion graphics.
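The parsing step described above can be sketched in plain Python. This is a minimal illustration, not the project's actual script. It assumes each serial line arrives as two comma-separated numbers (`head,jaw`) in a 0 to 1023 range, that head movement drives pitch, and that jaw movement drives volume. The message format, the ranges, the gesture-to-sound mapping, and the function names `parse_line`, `scale`, and `to_sound` are all hypothetical.

```python
from typing import Optional, Tuple


def parse_line(line: str) -> Optional[Tuple[float, float]]:
    """Parse one serial line of the assumed form 'head,jaw'.

    Returns None for malformed lines so a noisy serial stream
    cannot crash the caller.
    """
    parts = line.strip().split(",")
    if len(parts) != 2:
        return None
    try:
        head, jaw = float(parts[0]), float(parts[1])
    except ValueError:
        return None
    return head, jaw


def scale(value: float, in_min: float, in_max: float,
          out_min: float, out_max: float) -> float:
    """Linearly map value from the input range to the output range, clamped."""
    value = min(max(value, in_min), in_max)
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def to_sound(head: float, jaw: float) -> Tuple[float, float]:
    """Map raw readings to (pitch in Hz, volume 0..1).

    Assumed mapping: head position -> pitch, jaw opening -> volume.
    The 220-880 Hz range is an arbitrary two-octave example.
    """
    pitch_hz = scale(head, 0, 1023, 220.0, 880.0)
    volume = scale(jaw, 0, 1023, 0.0, 1.0)
    return pitch_hz, volume


if __name__ == "__main__":
    reading = parse_line("512,1023\n")
    if reading is not None:
        print(to_sound(*reading))
```

Inside TouchDesigner, a function like `parse_line` would be called from the script that the DAT Execute runs whenever the Serial DAT receives a new line. Returning `None` for bad input lets that script skip incomplete messages.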


Prototype

Using a combination of a spring-loaded lever and a rack and pinion mechanism, we successfully moved the rotary encoder by translating jaw movements into rotational motion.

We found that the main tension point had to be positioned between the jaw and gear, with a sturdy yet flexible structure to withstand this tension while allowing enough jaw movement and adaptability to different face shapes.
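To give a rough sense of how a rack and pinion turns jaw travel into encoder rotation, here is a small sketch of the geometry. It uses the standard relation that a rack moving a distance s turns a pinion of pitch radius r by s / r radians. The pinion radius, travel distance, and steps-per-revolution values are illustrative assumptions, not measurements from the prototype, and the function names are hypothetical.

```python
import math


def rack_travel_to_angle(travel_mm: float, pinion_radius_mm: float) -> float:
    """Return the pinion rotation in radians for a given rack travel."""
    if pinion_radius_mm <= 0:
        raise ValueError("pinion radius must be positive")
    return travel_mm / pinion_radius_mm


def angle_to_encoder_steps(angle_rad: float, steps_per_rev: int) -> int:
    """Convert a rotation angle to the nearest whole number of encoder steps."""
    return round(angle_rad / (2 * math.pi) * steps_per_rev)


if __name__ == "__main__":
    # Assumed example values: 10 mm of jaw travel, 5 mm pinion radius,
    # and an encoder with 20 steps per revolution.
    angle = rack_travel_to_angle(10.0, 5.0)
    print(math.degrees(angle), angle_to_encoder_steps(angle, 20))
```

A smaller pinion produces more rotation for the same jaw travel, which increases resolution but also raises the force the spring-loaded lever has to handle.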